How it Works

One of the fundamental things about drawing a 3D scene is that almost everything can be approximated as a load of small, flat pieces (polygons) joined at the edges, with a visible material applied to them. Computers generally use triangles, but you can also use quads or any n-gons if you feel so inclined. Each of the points which define the triangles (the vertices) can be expressed as a vector - 3 numbers, representing its X, Y and Z coordinates in space.

Mostly triangles in there...

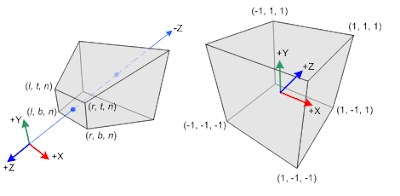

Unfortunately, our view of the world isn't quite as simple as this. Imagine a simple cube seen face-on: if you drew each point straight onto the screen, every corner of the front face would land directly on top of the matching corner of the rear face. In reality as we experience it, things which are further away appear smaller, so we shift points towards the centre of the screen depending on how deep they are. This gives a pretty nice view of where each point on the model ends up on the screen - you can say that each point has been projected onto the 2D screen.
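As a rough illustration of that shift towards the centre, here is a minimal sketch of a perspective projection in Python. It assumes the point is already expressed relative to the camera, that Z is the depth in front of the viewer, and that the screen centre is at (0, 0). The `project` function and its `focal_length` parameter are made up for this example, not taken from any particular engine.

```python
def project(point, focal_length=1.0):
    """Project a camera-space 3D point (x, y, z) onto the 2D screen.

    Assumption: z is the depth in front of the camera, and the screen
    centre is the origin. Dividing by depth pulls distant points
    towards the centre, which is what makes them look smaller.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    scale = focal_length / z
    return (x * scale, y * scale)


# Matching corners of the front and rear faces of a cube seen face-on.
front_corner = (1.0, 1.0, 2.0)
rear_corner = (1.0, 1.0, 4.0)

print(project(front_corner))  # (0.5, 0.5)
print(project(rear_corner))   # (0.25, 0.25)
```

The rear corner ends up closer to the centre of the screen than the front one, so the rear face is drawn smaller.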

Once you have your points as they would be positioned on the screen, you can then draw in the triangles themselves. The easiest way would be to stretch a texture onto each triangle - though even this has some quirks, which fall way beyond the scope of this blog post. A much more interesting and mathematical thing you can do is break each triangle into pixels and work out the colour of each one - this is known as shading and can be done incredibly fast on modern graphics hardware.

|

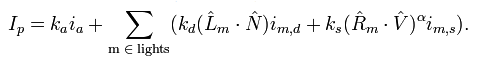

| All credit to Wikipedia for the image. |

The Phong model of lighting (named after the first person to describe it, Bui Tuong Phong) can be used to shade a variety of material types very effectively - it's typically used for plastics or non-transparent liquids, such as the gels in Portal 2. The principle is that the overall light reflected from a surface can be thought of as the sum of several different components, each of which is shown in the image above.

The ambient light is a constant term assumed to be present everywhere, standing in for light that has bounced around the rest of the scene; it is sometimes left out because it is not strictly necessary. The diffuse (scattered) light is reflected in roughly all directions by the material and does not depend on the viewing direction, only on the lighting direction. The specular (highlight) light is the intense reflection you may see off shiny surfaces, and unlike the diffuse light it does depend on where you are looking from. Together, they can be summed up to give a pretty good representation of a material as a whole.
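To make the sum concrete, here is a minimal sketch of the Phong calculation for a single point on a surface and a single white light, in Python. The function name, the coefficient values and the `shininess` exponent are assumptions picked for illustration; a real renderer would do this per pixel on the graphics hardware and per colour channel.

```python
import math


def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def phong_intensity(normal, to_light, to_viewer,
                    ambient=0.1, diffuse=0.6, specular=0.3, shininess=32):
    """Return ambient + diffuse + specular brightness at a surface point.

    All three vectors point away from the surface. The coefficients
    are illustrative material properties, not values from the post.
    """
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)

    n_dot_l = dot(n, l)
    if n_dot_l <= 0:
        # The light is behind the surface: only ambient light remains.
        return ambient

    # Mirror the light direction about the normal: r = 2(n.l)n - l
    r = tuple(2 * n_dot_l * nc - lc for nc, lc in zip(n, l))

    diffuse_term = diffuse * n_dot_l                           # ignores the viewer
    specular_term = specular * max(dot(r, v), 0.0) ** shininess  # depends on the viewer
    return ambient + diffuse_term + specular_term


# Light and viewer both straight above the surface: all three terms at full strength.
print(phong_intensity((0, 0, 1), (0, 0, 1), (0, 0, 1)))
```

Moving the viewer off to the side leaves the diffuse term untouched but makes the highlight fade, which is exactly the difference between the two described above.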

Restrain yourself, Jamie!

Not personally a fan of the cave missions, but they definitely looked good.

Confused? Now remember that the computer handles about 100,000 vertices, depending on the game, and 1,000,000 pixels, 50 times a second, just in graphics calculations for a modern game.

Things I Haven't Mentioned

What about the other side of objects?

There's a sneaky trick in how you choose the triangles - you number the vertices in an anti-clockwise order around each polygon, as seen from its front. When you're about to draw it, check whether the order is still anti-clockwise once the points have been projected onto the screen. If it is, you know it's facing you and should be drawn. If not, you're either looking at it sideways-on or from behind - which probably means you shouldn't be drawing it.
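One common way to do that check is to look at the sign of the triangle's area once its points are on the screen. The sketch below, in Python, assumes the screen's Y axis points upwards; if Y points down, as it does in many windowing systems, the sign flips. The function names are invented for this example.

```python
def signed_area_2d(a, b, c):
    """Signed area of the 2D triangle a, b, c.

    Positive when the points run anti-clockwise (with Y pointing up),
    negative when they run clockwise, zero when they lie on one line.
    """
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1])
                  - (c[0] - a[0]) * (b[1] - a[1]))


def is_front_facing(a, b, c):
    """True if the projected triangle is still anti-clockwise on screen."""
    return signed_area_2d(a, b, c) > 0


# The same triangle seen from the front and from behind.
print(is_front_facing((0, 0), (1, 0), (0, 1)))  # True
print(is_front_facing((0, 0), (0, 1), (1, 0)))  # False
```

A triangle seen exactly edge-on collapses to a line with zero area, so it is skipped as well.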

What about things behind you?

In the part where you move things with respect to the camera, things behind you end up with the opposite sign on their depth to everything in front of you. You can just throw out anything whose depth values are below zero.
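A minimal sketch of that test in Python, assuming each vertex is already relative to the camera and that positive Z means "in front". For simplicity it drops a whole triangle if any of its corners is behind the camera; real renderers are more careful and clip the triangle against a plane just in front of the viewer. The names here are hypothetical.

```python
def in_front_of_camera(vertex, near=0.0):
    """True if a camera-space vertex (x, y, z) has depth beyond `near`."""
    return vertex[2] > near


def cull_behind_camera(triangles, near=0.0):
    """Keep only triangles whose three vertices are all in front of the camera."""
    return [tri for tri in triangles
            if all(in_front_of_camera(v, near) for v in tri)]


triangles = [
    ((0, 0, 5), (1, 0, 5), (0, 1, 5)),     # entirely in front: kept
    ((0, 0, -2), (1, 0, -2), (0, 1, -2)),  # entirely behind: dropped
    ((0, 0, 3), (1, 0, -1), (0, 1, 3)),    # straddles the camera: dropped in this sketch
]

print(len(cull_behind_camera(triangles)))  # 1
```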

Anything else?

There's probably a solution or an explanation, but I'm really not going to pre-empt anything and everything you could ask. Ask in the comments, or wait for future installments, if this was actually interesting.

Yours geekily,

Charlie

Alright,

Another amazing post though I wish I instantly understood it all; computer technology is slightly out of my depth. I'm not going to bother going into so much depth about how interesting this post was because I don't think I have the words. But basically my hypothesis is in 20 years you will be like this guy, well in the same kinda position except like computer technology.

http://www.badscience.net/about-dr-ben-goldacre/

At least if you don't end up like Bill Gates with your own technology and billions. For some reason I imagine you going far with this and touring and doing talks, and this is the guy that I think most resembles this.

Just a passing thought though, if you still have this blog in 20 years, you can look back on this comment and smile that I was the first person to predict your success. ;p